Since 2003, Hermes has been a cluster of meaty PCs in various configurations. The cluster is divided into the following groups of machines:

The Cyrus machines comprise the message store, and are named after the software that they run. Each machine stores the email for a few thousand users; the machines are paired, so that each machine is the primary server for half of the users and the backup server for the other half. Our customized Cyrus continuously replicates email from its primary server to its backup server.

PPswitch has a number of jobs. It acts as a front-end to the Cyrus machines, hiding the fact that there are many of them. Under the names pop.hermes.cam.ac.uk and imap.hermes.cam.ac.uk it runs a POP and IMAP proxy which accepts connections from users and relays them to the correct Cyrus server depending on the user. It is also the University's central SMTP relay. It accepts incoming email via mx.cam.ac.uk and outgoing email via smtp.hermes.cam.ac.uk (from Hermes users) and ppsw.cam.ac.uk (from others). Email is scanned for spam and viruses, and delivered to the appropriate Cyrus machine, or department or college email server, or to other sites across the public Internet. PPswitch is named after the software it ran back in the dark days of the JANET coloured book protocols; it has run Exim exclusively since 1997.
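The paired message store and the proxy lookup described above can be sketched roughly as follows. This is only an illustration: the machine names (`cyrus-1` and so on), the user identifiers, and the table layout are hypothetical, and the real proxy's lookup mechanism is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CyrusPair:
    """Two message-store machines that act as each other's backup."""
    first: str
    second: str

    def backup_of(self, server: str) -> str:
        # Within a pair, each machine is the backup for the other.
        if server == self.first:
            return self.second
        if server == self.second:
            return self.first
        raise ValueError(f"{server} is not in this pair")


# Hypothetical data: machine names and user ids are invented for illustration.
PAIRS = [CyrusPair("cyrus-1", "cyrus-2"), CyrusPair("cyrus-3", "cyrus-4")]
PRIMARY_FOR_USER = {"abc12": "cyrus-1", "xyz99": "cyrus-2", "def34": "cyrus-3"}


def route(user: str) -> tuple[str, str]:
    """Return (primary, backup) for a user, as a front-end lookup might."""
    primary = PRIMARY_FOR_USER[user]
    for pair in PAIRS:
        if primary in (pair.first, pair.second):
            return primary, pair.backup_of(primary)
    raise LookupError(f"no pair contains {primary}")
```

In this sketch a proxy would relay the connection to the first element of `route(user)`; the second element is where the replicated copy of that user's mail would live.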

The machine that acts as hermes.cam.ac.uk itself provides a timesharing service accessible via ssh, which allows people to run Pine to read their email or use the menu system to manage mailing lists or virtual domains. It also runs the webmail server, which the vast majority of our users use to read their email. We actually have two such machines, one of which acts as a warm spare for the other.

Similarly, we have a pair of machines which run the transport side of the mailing lists service. This used to run on ppswitch, but has been moved to its own machine (plus a warm spare) in order to support Mailman, which cannot be clustered like the old lists system.

The remaining machines include our admin server, which hosts our source code and configuration repository and our automated install server, and keeps the configuration tables on the other machines up to date. There is also a backup server, which keeps a third replicated copy of everyone's email on disk and spools it to a fourth copy on tape; it also backs up the admin box, our workstations, etc. We also have a machine which hosts exim.org, and one or two test/development boxes.

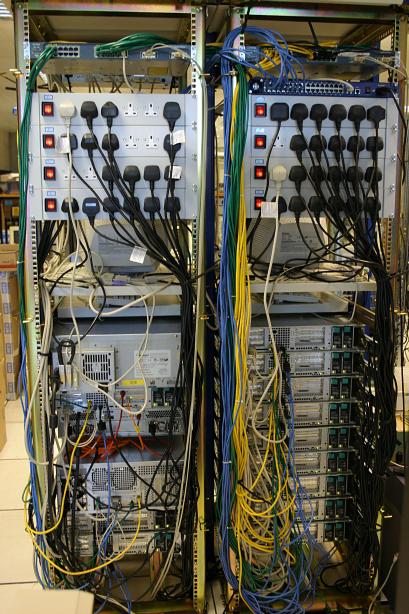

You can see most of the above in this picture from November 2005:

The four upper machines on the left are ppswitch. Each machine has 70GB of battery-backed RAID disk for the Exim spool (that's the formatted capacity), 3GB RAM, and dual 3.2GHz Intel Xeon processors. These machines have quite a lot of CPU because they do a lot of cryptography (TLS for IMAP, POP, and SMTP), and scanning email takes a lot of work.

The large machine underneath is one of the second-generation Cyrus machines. We will gradually be replacing the older machines with several like this one. It has 3.5TB of storage in the form of sixteen SATA2 disks in a battery-backed RAID. Again, 3GB of RAM for IMAP server processes and to act as a disk cache. Only one CPU because it only has to move data around.

The bottom 8 machines in the right-hand two racks were the first machines in the Cyrus cluster. Each machine has seven 72GB disks for a 350GB formatted capacity. The new machines are ten times bigger.
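As a quick check of the figures quoted for the two generations of Cyrus machine, the arithmetic can be written out as below. It assumes 1TB = 1000GB and treats the 3.5TB figure for the new machines as directly comparable to the 350GB formatted capacity of the old ones; the text does not say how the old RAID was laid out, so the usable fraction is just a ratio, not a statement about the RAID level.

```python
DISKS_PER_OLD_MACHINE = 7
DISK_SIZE_GB = 72
OLD_FORMATTED_GB = 350
NEW_STORAGE_GB = 3500  # 3.5TB, assuming 1TB = 1000GB

# Raw capacity of a first-generation machine before RAID and filesystem overhead.
raw_gb = DISKS_PER_OLD_MACHINE * DISK_SIZE_GB

# Share of the raw capacity left after formatting.
usable_fraction = OLD_FORMATTED_GB / raw_gb

# How much bigger a second-generation machine is.
growth = NEW_STORAGE_GB / OLD_FORMATTED_GB

print(f"raw: {raw_gb}GB, usable: {usable_fraction:.0%}, growth: {growth:.0f}x")
```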

The top six machines include the primary hermes.cam.ac.uk + webmail server, our admin server, exim.org, the primary lists.cam.ac.uk server, a test/development box, and a machine belonging to another part of the CS.

The second part of Hermes is in a similar pair of racks in another part of the machine room. The left-hand rack contains the second set of first-generation Cyrus machines.

Under the right-hand console is a 4U tape robot containing two LTO drives and a 17-cartridge tape magazine. Below that is the backup server that drives the tape robot, and under that is its 3TB SATA RAID disk shelf.

Below that is the other second-generation Cyrus machine, and the warm spare webmail and lists servers.

This photo gives you a better view of the tops of the racks, which house the keyboard/video console multiplexer at the front, the network switches at the back, and a small blue serial console server.

A view of the back of the rack. You can see the power distribution, the serial console cabling (blue), the private network (green) and the public network (yellow). The computers have 100Mbit connections and the switch uplinks are gigabit. The orange cable is the Fibre Channel connection between the backup server and its disks.

Tom had some fun when taking photos...

From this angle you can see the old Hermes racks, and in the distance some racks occupied by Unix Support and Windows Support.

Hermes did not look very different in summer 2004.

The main difference is that the bottom half of the right-hand rack held exim.org and the admin server, which were moved when the second-generation Cyrus machine was installed there. The computer that became a lists server was at that time a ppswitch machine.

The next two pictures show the New Hermes setup in April 2003, before it was brought into full service.

Nothing in the bottom of the right-hand rack.

In the place of the leftmost of the set of racks in the first 2005 picture is an old PDP11 rack which held our old admin/backup server. There's a network switch in the top, used for the old private admin network. Under that is a pair of tape drives for backups, then the admin server (prism), then its disks, and in the bottom is a cold spare replacement for prism.

In the other two racks, the lower 8 machines are half of the Cyrus cluster, the same as two years later. The upper 5 machines are ppswitch, which had recently been upgraded in order to run the spam and virus filtering software. The other machine belongs to another part of the CS.

This picture shows the final incarnation of the original Hermes architecture, which was based on an NFS file store shared by multiple Sun servers running Exim for message delivery and UW IMAP for message access.

This setup stopped taking new users in summer 2003 and was decommissioned in mid 2004.

The leftmost rack contains the fileservers: two NetApp F740 NFS servers, each with two shelves of disks (totalling 280GB) and, in the middle, a DLT8000 tape backup drive.

The bottom half of the next rack contains two of the front-end servers (yellow and green), which are Sun E220Rs, each with a pair of disk packs on top. Above them are three machines that were ppswitch, then later webmail, and finally miscellaneous test machines. Towards the top is a small blue serial console server and a couple of power strips.

On the table are the serial consoles which talk to the Suns and the NetApps via the console server. (We can also log into the console server remotely, so we don't have to go into the machine room too much.) Under the table are the other two front-end servers (red and orange) which are Sun E450s.

On the far right you can see part of the old admin server's rack, which you can see better in a previous picture.

A NetApp, halted then turned off for the last time, in July 2004. After the shut-down, the NetApps remained in the machine room, reserving rack space for the future expansion of Hermes. They were finally removed to our office in January 2006.

I also have some pictures of burnt hardware from when Orange (one of the old Hermes front-end machines) nearly caught fire.

For a while there was a shed in the machine room.

Our sister institution, the Computer Laboratory, has a page of archive photographs of early machines that provided the University's Computing Service.

You might be interested to compare and contrast some pictures of Oxford's machine room, including their email service, Herald. (They have some historical photos too.)

<fanf2@cam.ac.uk>

$Id: photos.html,v 1.17 2006/03/06 11:01:15 fanf2 Exp $